基于流处理器编程模型和集群架构的研究

I摘要今天,GPU作为可编程通用流处理器的代表,其性能飞速发展,已经打破CPU发展所遵照的摩尔定律。而且,GPU利用其可编程性和功能扩展性来支持复杂的计算和处理,这个特性已经得到业界的公认。在架构上,主流GPU都是采用统一的流体系架构,并且实现了细粒度的线程间通信,大大扩展了通用领域的应有范围。因此,基于GPU的通用计算模型的研究也成为当今研究的焦点。NVIDIACUDA编程模型的出现使基于GPU的通用计算(GPGPU)得到了突破性的发展,传统GPGPU的Cg和Brook+编程模型慢慢将退出历史的舞台。利用GPU大规模的线程级并行处理能力,可以将GPU作为计算的通用计算平台;通过构建高性能集群...

相关推荐

-

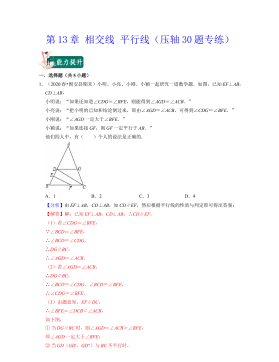

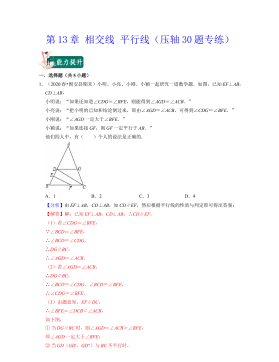

七年级数学下册(易错30题专练)(沪教版)-第13章 相交线 平行线(原卷版)VIP免费

2024-10-14 25

2024-10-14 25 -

七年级数学下册(易错30题专练)(沪教版)-第13章 相交线 平行线(解析版)VIP免费

2024-10-14 28

2024-10-14 28 -

七年级数学下册(易错30题专练)(沪教版)-第12章 实数(原卷版)VIP免费

2024-10-14 27

2024-10-14 27 -

七年级数学下册(易错30题专练)(沪教版)-第12章 实数(解析版)VIP免费

2024-10-14 19

2024-10-14 19 -

七年级数学下册(压轴30题专练)(沪教版)-第15章平面直角坐标系(原卷版)VIP免费

2024-10-14 19

2024-10-14 19 -

七年级数学下册(压轴30题专练)(沪教版)-第15章平面直角坐标系(解析版)VIP免费

2024-10-14 27

2024-10-14 27 -

七年级数学下册(压轴30题专练)(沪教版)-第14章三角形(原卷版)VIP免费

2024-10-14 19

2024-10-14 19 -

七年级数学下册(压轴30题专练)(沪教版)-第14章三角形(解析版)VIP免费

2024-10-14 30

2024-10-14 30 -

七年级数学下册(压轴30题专练)(沪教版)-第13章 相交线 平行线(原卷版)VIP免费

2024-10-14 26

2024-10-14 26 -

七年级数学下册(压轴30题专练)(沪教版)-第13章 相交线 平行线(解析版)VIP免费

2024-10-14 22

2024-10-14 22

相关内容

-

七年级数学下册(压轴30题专练)(沪教版)-第15章平面直角坐标系(解析版)

分类:中小学教育资料

时间:2024-10-14

标签:无

格式:DOCX

价格:15 积分

-

七年级数学下册(压轴30题专练)(沪教版)-第14章三角形(原卷版)

分类:中小学教育资料

时间:2024-10-14

标签:无

格式:DOCX

价格:15 积分

-

七年级数学下册(压轴30题专练)(沪教版)-第14章三角形(解析版)

分类:中小学教育资料

时间:2024-10-14

标签:无

格式:DOCX

价格:15 积分

-

七年级数学下册(压轴30题专练)(沪教版)-第13章 相交线 平行线(原卷版)

分类:中小学教育资料

时间:2024-10-14

标签:无

格式:DOCX

价格:15 积分

-

七年级数学下册(压轴30题专练)(沪教版)-第13章 相交线 平行线(解析版)

分类:中小学教育资料

时间:2024-10-14

标签:无

格式:DOCX

价格:15 积分